When your AI vendor asks you to click "I agree" on someone else's terms

The Anthropic integration raised a question we weren't expecting: who exactly are we doing business with?

A few weeks ago, we started our weekly meeting the way most people in our field did that week: talking about Anthropic. Microsoft’s announcement that Claude models would be available in Copilot Studio and in the Researcher agent wasn’t just another feature drop. It marked the first time a non-OpenAI model entered the Copilot ecosystem, and with it came something unexpected: a terms and conditions checkbox.

The question landed in our group chat almost immediately. If the model runs in Microsoft’s datacenter, under Microsoft’s infrastructure, why are we accepting Anthropic’s terms? It’s a small thing, a single setting that admins can toggle on or off. But it opened a conversation about what happens when the AI platform you’ve committed to becomes a marketplace of models, each with its own legal framework.

The terms of the arrangement

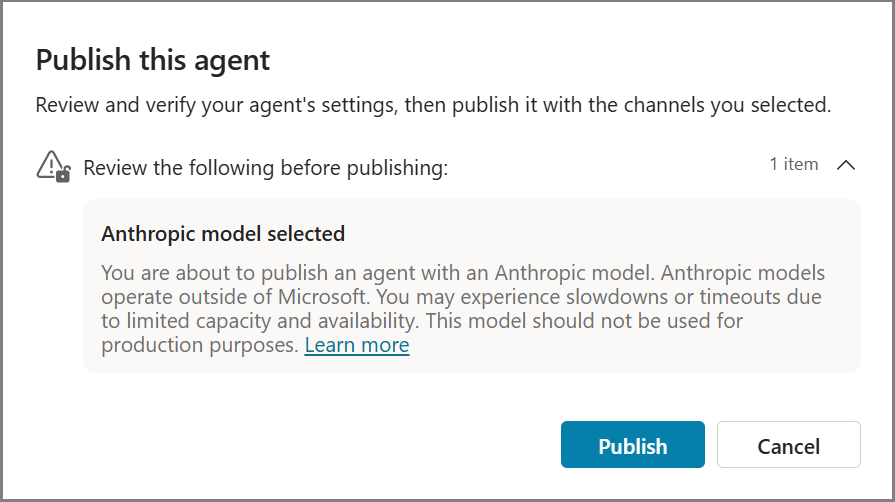

The implementation is straightforward enough. The Anthropic integration sits behind an admin control, off by default. You flip the switch, your organization accepts the terms, and Claude becomes available alongside the GPT models. According to the documentation we reviewed, you’re explicitly agreeing to Anthropic’s terms and conditions, rather than to Microsoft terms extended to cover a third-party service running on Microsoft’s infrastructure.

This caught us off guard. One of us pointed out the apparent contradiction: “I have an agreement with Microsoft, not with OpenAI either. So what’s the difference?” It’s a fair question. When we use GPT-4 through Copilot, we’re not separately agreeing to OpenAI’s terms. We assumed the same arrangement would apply here. It doesn’t:

“Anthropic models are hosted outside Microsoft and are subject to Anthropic terms and data handling, which need to be reviewed and accepted before makers can use them. These models are available before an official release so that you can get early access and provide feedback.” (source)

The most plausible explanation we could land on follows from the first sentence of that quote: because the Anthropic models are hosted outside Microsoft, this functions more like a plugin or connector than a built-in model. Just as using a third-party app in Microsoft 365 requires accepting that vendor’s terms, access to Claude is governed by Anthropic’s agreement.

What this signals about platform strategy

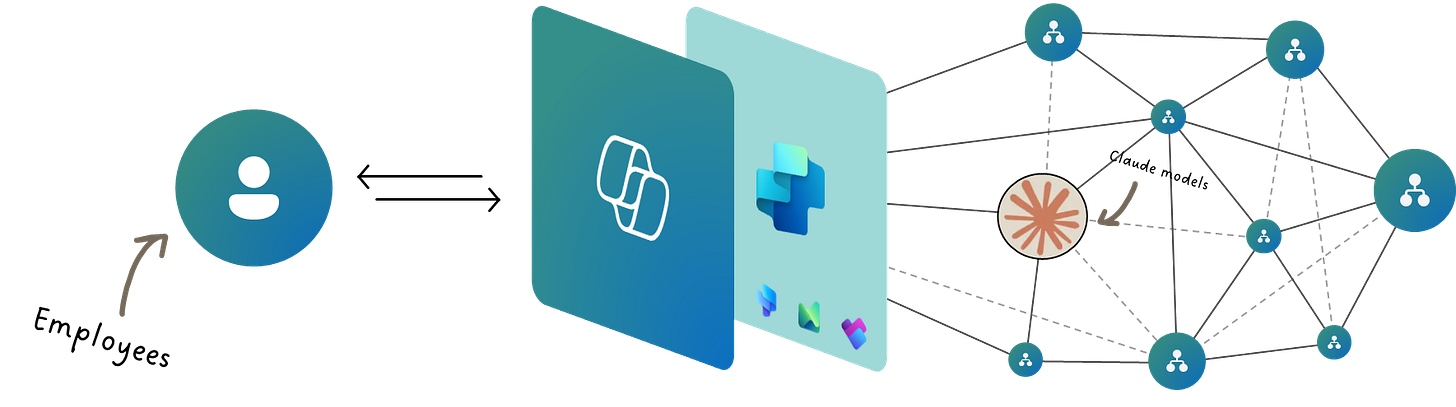

On one hand, we see this as genuinely positive. Microsoft looking beyond OpenAI suggests they’re building a true platform, not just a branded wrapper around a single vendor. It reduces dependency risk and acknowledges that different models excel at different tasks. The implications are significant: if Anthropic is first, Google’s Gemini could be next, or any other frontier model that proves its value.

This aligns with Microsoft’s stated “Copilot: the UI for AI” positioning. In practice, this matters because the GenAI ecosystem won’t be a monoculture. Makers will need flexibility to choose models based on use case, cost, or capability. From that perspective, this integration opens doors.

But here’s where it may get complicated. If Google arrives with Gemini, do we then accept Google’s terms? What about Grok, the model from xAI? Can we selectively enable some and block others, or is it all-or-nothing at the tenant level? One of us raised the legal angle: how many model agreements will legal teams need to review, and how often will they change?

The questions we’re left with

We walked away from this conversation with more questions than answers, which honestly feels about right for where the industry is. The technical implementation seems solid and the strategic logic makes sense, but the operational reality for IT and legal teams is still forming.

At its core, this is a preview of the multi-model future. Most organizations have built their AI governance frameworks around a single vendor relationship with Microsoft, which in turn had a single (if complex) relationship with OpenAI. That simplicity is ending. The next phase requires thinking about model providers as a portfolio, each with its own risk profile, terms, and capabilities.
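
To make the portfolio idea concrete, here is a minimal Python sketch of what a model-provider register might look like. Everything in it is our own illustration: the `ModelProvider` class, its field names, and the `"v1"`/`"v2"` version strings are hypothetical placeholders, not a Microsoft or Anthropic API and not real terms versions. It only shows the kind of bookkeeping a governance team would probably need once each provider brings its own terms.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ModelProvider:
    """One entry in a hypothetical model-provider register."""
    name: str                    # provider label, e.g. "Anthropic"
    hosted_by_platform: bool     # False if hosted outside the platform vendor
    terms_owner: str             # whose terms govern usage
    current_terms_version: str   # placeholder for the latest published terms
    accepted_terms_version: Optional[str] = None  # version legal signed off on
    enabled: bool = False        # opt-in, mirroring an off-by-default control

    def needs_legal_review(self) -> bool:
        """True if no terms were accepted or the accepted version is stale."""
        return self.accepted_terms_version != self.current_terms_version

    def usable(self) -> bool:
        """Usable only if switched on and the accepted terms are current."""
        return self.enabled and not self.needs_legal_review()


def review_queue(providers: List[ModelProvider]) -> List[str]:
    """Names of enabled providers whose terms need a (re-)review."""
    return [p.name for p in providers if p.enabled and p.needs_legal_review()]


# Illustrative data only; the version strings are made up.
providers = [
    ModelProvider("OpenAI (via Copilot)", True, "Microsoft", "v1", "v1", True),
    ModelProvider("Anthropic", False, "Anthropic", "v2", "v1", True),
]

print(review_queue(providers))  # ['Anthropic']
```

In this toy example the Anthropic entry lands in the review queue because its accepted terms are one version behind. That is the scenario the legal question above is really about: not just how many agreements there are, but how often each one changes.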

For now, the Anthropic integration is opt-in and clearly signposted. That’s a good start. But as we said in the meeting: we’re about to be “waterboarded with new features.” The pace won’t slow down, and each new model that arrives will bring this same question: who are we actually agreeing to work with, and what exactly did we just accept?